When Your Code Says "FU35": The Hidden Hazards of Random Word Generation

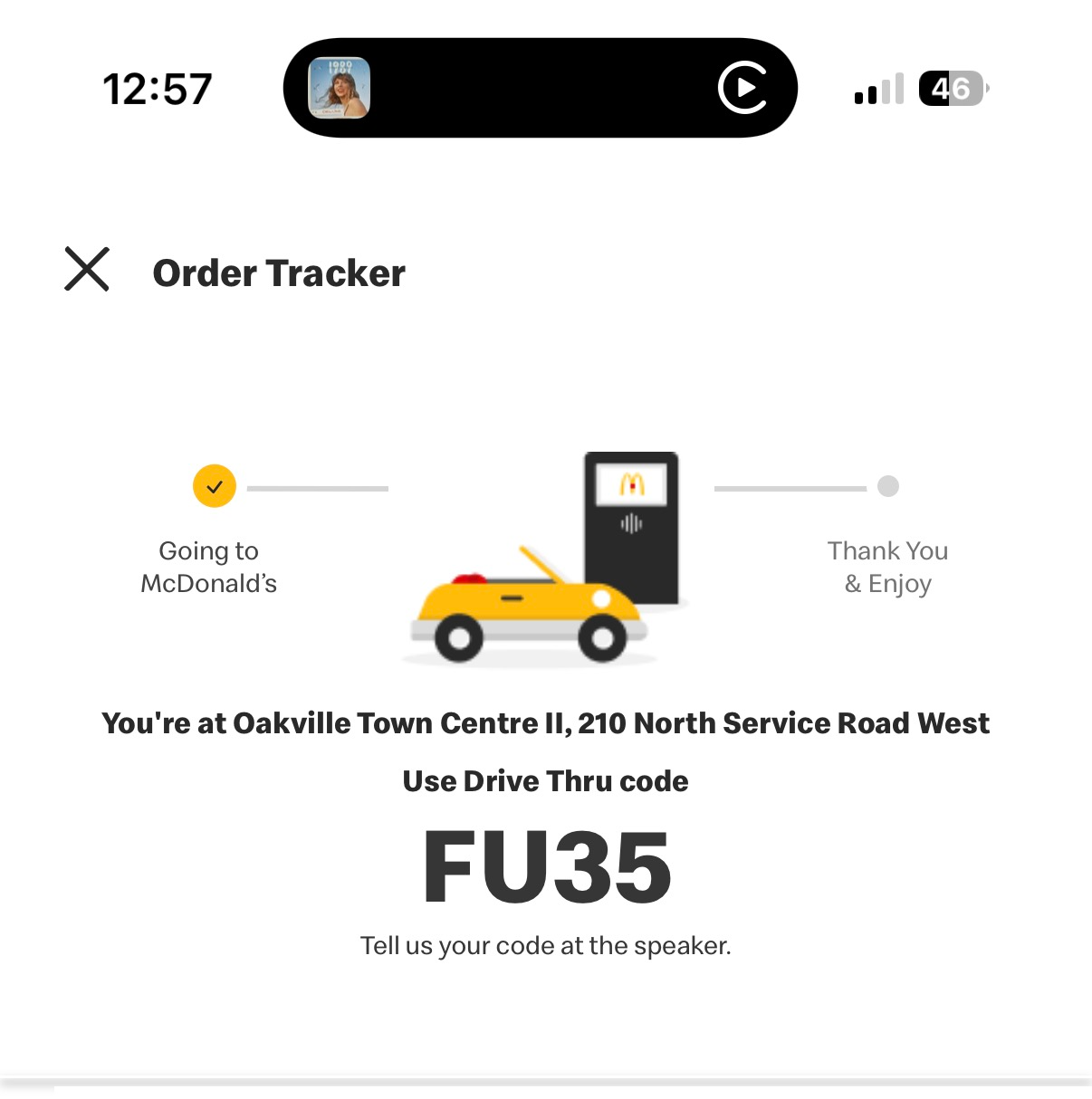

A few weeks ago, I was at a McDonald’s drive-thru when the pickup screen flashed a code meant to confirm my mobile order: FU35. You can imagine the awkwardness of saying “F U” into a speaker to a total stranger. I half expected the system to beep back in protest. At least my kids got a good chuckle in the backseat at their old man's expense.

That moment brought back memories of a similar issue I ran into years ago while developing a password generator based on XKCD’s famous comic on password strength. The premise is simple: instead of obscure strings like 7H!$rT, use a few random dictionary words - easy to remember, hard for computers to guess. My implementation, xkcd-pwgen, worked beautifully from a technical standpoint. The problem? It didn’t account for context.
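
The premise fits in a few lines of Python. This is a minimal sketch, not the actual xkcd-pwgen code: the tiny `WORDS` list and the `make_passphrase` name are placeholders, and a real generator would load thousands of words.

```python
import secrets

# Hypothetical stand-in word list; a real generator would load thousands of words.
WORDS = ["correct", "horse", "battery", "staple",
         "garden", "window", "pencil", "river"]


def make_passphrase(words, count=4, sep="-"):
    """Join `count` words, each drawn uniformly at random with a CSPRNG."""
    return sep.join(secrets.choice(words) for _ in range(count))


print(make_passphrase(WORDS))
```

Note that nothing here looks at what the words mean, or at how they read next to each other.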

When Random Isn’t Always Random Enough

Word-based code generation has become increasingly popular - for passwords, one-time codes, IoT pairing, even order pickups. Humans find “three random words” easier to recall and repeat than cryptic alphanumeric strings. But when your randomness relies on a public word list, you’re at the mercy of what’s inside it.

I learned that the hard way. The first word list I found online seemed harmless enough. Then one day, out popped a gem like mount-kids-happily or dirty-doctor-desire. Not ideal when you’re sending out-of-band passphrases to end-users - or worse, having support staff read them aloud on a recorded line.

The problem isn’t limited to amateur projects. As the pickup screen above shows, major platforms that generate user-facing codes - food apps, smart devices, streaming services - face the same challenge: randomness meets reputation. No one wants to explain to a customer why their Wi-Fi setup code sounds like a late-night cable title.

Building Better Word-Based Systems

So how do we fix it? The lesson here isn’t “don’t use words,” but “curate your words.”

Here’s what I learned rebuilding my generator:

- Start with a clean, vetted corpus. Use established lists like the EFF’s word lists for Diceware-style passphrases, or other curated word sets designed for human use. Avoid scraping the web or grabbing random open-source word lists unless you review them first.

- Filter for appropriateness. I ran my word list through a profanity and suggestive-content filter, but I also looked for unintended pairings. A single word might be fine on its own, yet combining words can still yield embarrassing results.

- Contextual testing matters. Generate thousands of codes and spot-check them. You’ll quickly find the edge cases humans will notice before your QA bot does.

- Add structure when possible. If the use case allows, draw each word from a known-safe category in a fixed pattern (e.g., “animal-color-noun”) or mix in known-safe numbers to limit the linguistic chaos.
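
The four points above can be combined into a rough sketch. Everything here is illustrative: the category lists, the blocklists, and the function names are hypothetical placeholders, not the contents of my rebuilt generator.

```python
import secrets

# Hypothetical, pre-reviewed category lists; real ones would be far larger.
ANIMALS = ["otter", "falcon", "badger", "heron"]
COLORS = ["amber", "teal", "ivory", "olive"]
NOUNS = ["lantern", "meadow", "anchor", "pebble"]

# Hypothetical blocklists: words to drop outright, and adjacent pairings
# that are only a problem when they appear together.
BLOCKED_WORDS = {"dirty", "desire"}
BLOCKED_PAIRS = {("mount", "kids"), ("dirty", "doctor")}


def clean(words):
    """Drop blocked words from a candidate list (case-insensitive)."""
    return [w for w in words if w.lower() not in BLOCKED_WORDS]


def has_blocked_pair(parts):
    """Return True if any two adjacent words form a blocked pairing."""
    return any((a, b) in BLOCKED_PAIRS for a, b in zip(parts, parts[1:]))


def make_code(categories, max_tries=100):
    """Pick one word per category, retrying if an adjacent pairing is blocked."""
    for _ in range(max_tries):
        parts = [secrets.choice(clean(cat)) for cat in categories]
        if not has_blocked_pair(parts):
            return "-".join(parts)
    raise RuntimeError("no acceptable code found; check the word lists")


def sample_for_review(categories, n=1000):
    """Generate a batch of distinct codes for a human to skim."""
    return sorted({make_code(categories) for _ in range(n)})


for code in sample_for_review([ANIMALS, COLORS, NOUNS], n=20):
    print(code)
```

Rejecting blocked pairings shrinks the space of possible codes slightly, which matters more for passwords than for pickup codes. The review batch is the important part: a blocklist only catches what someone already thought of.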

It’s the same principle we use in telecom when assigning vanity numbers or IVR prompts - just because a sequence is valid doesn’t mean it’s appropriate.

Words, Randomness, and the Human Factor

Back to that McDonald’s drive-thru. Somewhere in a backend database, a harmless random number generator paired two letters and two digits without realizing what it had done to me. Machines don’t blush - people do.
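
I have no idea how that system actually builds its codes, but for a short letters-plus-digits format, one possible mitigation is to leave vowels out of the alphabet so letter pairs can't spell words, then block whatever still reads badly. A minimal sketch, where the alphabet, the blocklist entry, and the `make_order_code` name are all my own assumptions:

```python
import secrets

# Assumed alphabet: no vowels (or Y), so a letter pair cannot spell a word.
LETTERS = "BCDFGHJKLMNPQRSTVWXZ"
DIGITS = "0123456789"

# Hypothetical blocklist for consonant pairs that still read badly.
BLOCKED_PREFIXES = {"FK"}


def make_order_code(max_tries=100):
    """Return two letters plus two digits, skipping blocked letter pairs."""
    for _ in range(max_tries):
        prefix = "".join(secrets.choice(LETTERS) for _ in range(2))
        if prefix in BLOCKED_PREFIXES:
            continue  # draw again rather than show this one to a customer
        number = "".join(secrets.choice(DIGITS) for _ in range(2))
        return prefix + number
    raise RuntimeError("could not generate an acceptable code")


print(make_order_code())
```

With no "U" in the alphabet, this generator cannot produce FU35 at all.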

The takeaway? Whether you’re designing password systems, order codes, or customer-facing identifiers, randomness is easy. Thoughtful randomness is hard.

A little human review can save a lot of human embarrassment.

Call to action:

If you build systems that communicate with people - literally or figuratively - take the time to audit your “random” outputs. A quick profanity filter, a curated word list, or even a few lines of code can prevent a thousand “FU35” moments.